There is a lot of enthusiasm in the SEO industry for Python right now.

It's a comparatively easy programming language to learn and has become accessible to the SEO community through guides and blogs.

But if you want to learn a new language for analyzing and visualizing your search data, consider looking into R.

This article covers the basics of how you can produce time series forecasts in RStudio from your Google Search Console click data.

But first, what is R?

R is "a language and environment for statistical computing and graphics," according to The R Project for Statistical Computing.

R isn't new and has been around since 1993. Still, learning some of the basics of R, including how you can interact with Google's various APIs, can be advantageous for SEOs.

If you want to pick up R as a new language, good courses to learn from are:

But if you grasp the basics and want to learn data visualization fundamentals in R, I recommend Coursera's guided project, Application of Data Analysis in Business with R Programming.

And then you also need to install:

What follows are the steps for creating traffic forecasting models in RStudio using click data.

Step 1: Prepare the data

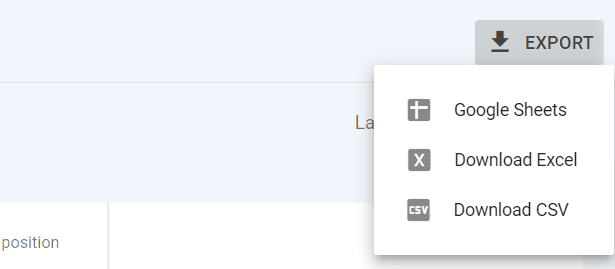

The first step is to export your Google Search Console data. You can do this through the user interface by exporting the data as a CSV:

Or, if you want to pull your data via RStudio directly from the Google Search Console API, I recommend you follow this guide from JC Chouinard.

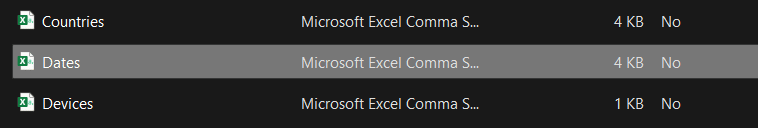

If you do this via the interface, you'll download a zip file with various CSVs, from which you want the workbook named "Dates":

Your date range can be a quarter, six months, or 12 months. All that matters is that you have the values in chronological order, which this export easily produces. (You just need to sort Column A so the oldest values are at the top.)
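If you would rather do that sorting step in R than in a spreadsheet, the following is a minimal sketch using base R only. The `Date` and `Clicks` column names and the day/month/year date format are taken from the import command used in Step 2; the three rows of data are made up for illustration, and your own export may use a different date format.

```r
# Made-up rows in the shape of the "Dates" export, newest first
dates_export <- data.frame(
  Date = c("03/01/2023", "02/01/2023", "01/01/2023"),
  Clicks = c(130, 118, 125)
)

# Parse the text dates (assumed day/month/year) into real Date values
dates_export$Date <- as.Date(dates_export$Date, format = "%d/%m/%Y")

# Sort so the oldest values are at the top
dates_sorted <- dates_export[order(dates_export$Date), ]

# Check that consecutive rows are exactly one day apart (no missing days)
gaps <- as.numeric(diff(dates_sorted$Date))
no_missing_days <- all(gaps == 1)
```

If `no_missing_days` comes back `FALSE`, it is worth looking at where the gaps are before modeling, since the later steps treat the rows as an evenly spaced daily series.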


Step 2: Plot the time series data in RStudio

Now we need to import and plot our data. To do this, we must first install four packages and then load them.

The first command to run is:

## Install packages
install.packages("tidyverse")
install.packages("tsibble")
install.packages("fabletools")
install.packages("bsts")

Followed by:

## Load packages
library("tidyverse")
library("tsibble")
library("fabletools")
library("bsts")

You then want to import your data. The only change you need to make to the below command is the file name (keeping the CSV extension), shown in red:

## Read data
mdat <- read_csv("example data csv.csv",
                 col_types = cols(Date = col_date(format = "%d/%m/%Y")))

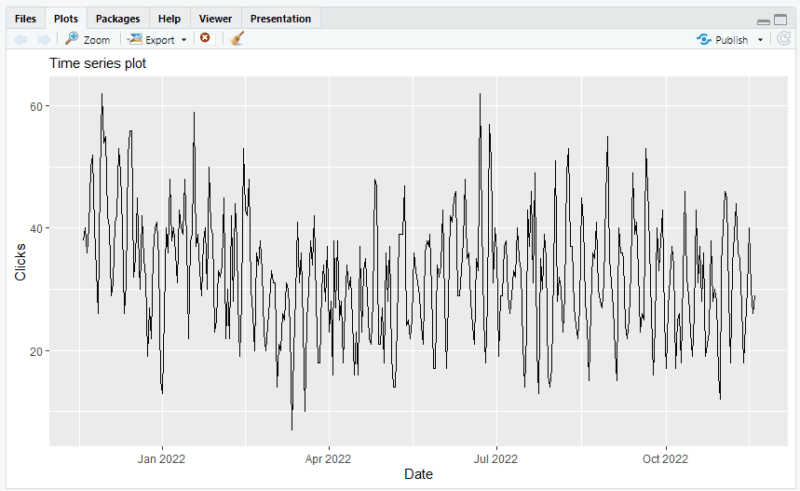

Then the last two commands in plotting your data are to make the time series object, then to plot the graph itself:

## Make time series object
ts_data <- mdat %>%
  as_tsibble(index = "Date")

Followed by:

## Make plot
autoplot(ts_data) +
  labs(x = "Date", subtitle = "Time series plot")

And in your RStudio interface, a time series plot will appear:

Step 3: Model and forecast your data in RStudio

At this stage, it's important to acknowledge that forecasting is not an exact science and relies on several truths and assumptions. These are:

- Historical trends and patterns are assumed to continue to repeat, to varying degrees, over time.

- Forecasts will contain errors and anomalies because your data set (your real-world click data) will contain anomalies that could be construed as errors.

- Forecasts typically revolve around the average, making group forecasts more reliable than running a series of micro-forecasts.

- Shorter-range forecasting is typically more accurate than longer-range forecasting.
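The point about group forecasts can be illustrated with a small simulation. This is only a sketch under simplifying assumptions: the 50 pages, the 90 days, and the independent Poisson click counts with a mean of 20 per page per day are all invented, and real pages are rarely independent of each other. It compares how noisy each individual page is, relative to its own average, against how noisy the site-wide total is.

```r
set.seed(123)

n_days <- 90
n_pages <- 50

# Invented per-page daily clicks: one column per page, one row per day
page_clicks <- matrix(rpois(n_days * n_pages, lambda = 20), nrow = n_days)

# Coefficient of variation: day-to-day noise relative to the average level
cv <- function(x) sd(x) / mean(x)

page_cv <- apply(page_clicks, 2, cv)   # noise of each page on its own
site_cv <- cv(rowSums(page_clicks))    # noise of the aggregated series

mean(page_cv)
site_cv
```

In this setup the aggregated series is far smoother than any single page, which is why a forecast built on the site-wide total tends to be more stable than a set of page-level forecasts added together.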

With this out of the way, we can begin to model and forecast our traffic data.

For this article, I'll visualize our data as a Bayesian Structural Time Series (BSTS) forecast using bsts, one of the packages we installed earlier. The graph it produces, a central forecast surrounded by upper and lower bounds, follows a format shared by most forecasting methods.

Most marketers will have seen, or at least be familiar with, the model, as it is commonly used across many industries for forecasting purposes.

The first command we need to run fits the BSTS model to our data:

## Fit the BSTS model
ss <- AddLocalLinearTrend(list(), ts_data$Clicks)
# With one row per day, nseasons = 52 describes a cycle of 52 observations
ss <- AddSeasonal(ss, ts_data$Clicks, nseasons = 52)
model1 <- bsts(ts_data$Clicks,
               state.specification = ss,
               niter = 500)

And then plot the model components:

plot(model1, "comp")

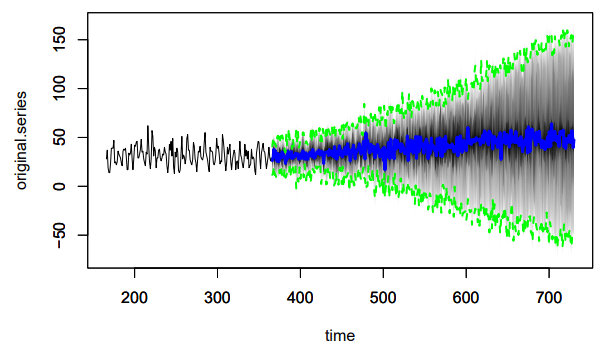
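One thing worth checking in the seasonal component is whether it matches the spacing of your data. `AddSeasonal()` counts seasons in observations, so with one row per day `nseasons = 52` describes a 52-day cycle rather than 52 weeks. The sketch below is an alternative specification, not the model used in this article: it uses `nseasons = 7` to capture a day-of-week pattern. The click values are simulated, and `ss_daily`, `model_daily`, and `pred_daily` are names chosen for this example.

```r
library(bsts)

set.seed(42)

# Simulated stand-in for daily clicks: 26 weeks with a weekday/weekend pattern
n_days <- 7 * 26
weekly_pattern <- c(120, 125, 122, 118, 110, 70, 65)
clicks <- rep(weekly_pattern, length.out = n_days) + rnorm(n_days, sd = 5)

# Trend plus a seven-season component, one season per day of the week
ss_daily <- AddLocalLinearTrend(list(), clicks)
ss_daily <- AddSeasonal(ss_daily, clicks, nseasons = 7)

# ping = 0 silences the progress output; seed makes the run repeatable
model_daily <- bsts(clicks,
                    state.specification = ss_daily,
                    niter = 300,
                    ping = 0,
                    seed = 42)

# Forecast four weeks ahead, discarding the first 100 iterations as burn-in
pred_daily <- predict(model_daily, horizon = 28, burn = 100)
```

If your data shows an annual pattern instead, `AddSeasonal()` also takes a `season.duration` argument, so `nseasons = 52` with `season.duration = 7` would describe 52 week-long seasons on daily data. That needs well over a year of history to estimate sensibly.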

And now we can visualize one- and two-year forecasts.

Going back to the previously mentioned general forecasting rules, the further into the future you forecast, the less accurate it becomes. Thus, I stick to a maximum of two years when doing this.

And because BSTS reports an upper and lower bound that widen as the horizon grows, the forecast also becomes fairly pointless past a certain point.

The below command will produce a one-year future BSTS forecast for your data:

# 1-year
pred1 <- predict(model1, horizon = 365)
plot(pred1, plot.original = 200)

And you'll get back a graph like this:

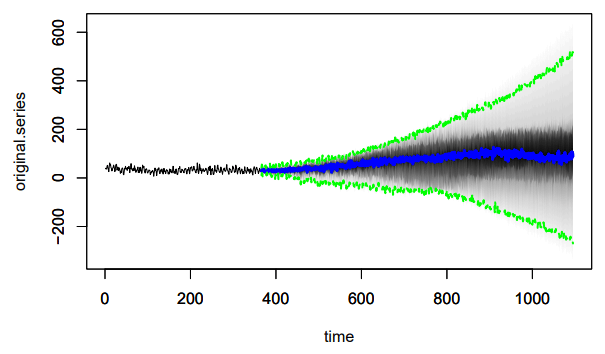

To produce a two-year forecasting graph from your data, you want to run the below command:

# 2-year
pred2 <- predict(model1, horizon = 365*2)
plot(pred2, plot.original = 365)

And this will produce a graph like this:

As you can see, the upper and lower bounds in the one-year forecast had a range of -50 to +150, whereas the two-year forecast has -200 to +600.

The further into the future you forecast, the greater this range becomes and, in my opinion, the less useful the forecast becomes.
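To put numbers on how quickly that range grows, you can measure the width of the band at a few horizons instead of reading it off the chart. The sketch below uses base R on simulated data: `interval_width()` is a hypothetical helper written for this example, and the random-walk paths stand in for a model's forecast draws. It assumes the bounds are stored as a two-row matrix with the lower bound in row 1 and the upper bound in row 2. To my knowledge that is how the `interval` element of a bsts prediction is laid out, but check with `str(pred1)` before relying on it.

```r
# Hypothetical helper: width of the band at each forecast step.
# `interval` is assumed to be a 2-row matrix (row 1 = lower, row 2 = upper),
# with one column per forecast step.
interval_width <- function(interval) {
  stopifnot(is.matrix(interval), nrow(interval) == 2)
  interval[2, ] - interval[1, ]
}

set.seed(1)

# Stand-in for forecast draws: 1,000 simulated random-walk paths, 730 days ahead
draws <- t(replicate(1000, cumsum(rnorm(730))))

# 95% band at each step, giving a 2 x 730 matrix
interval <- apply(draws, 2, quantile, probs = c(0.025, 0.975))

width <- interval_width(interval)

# Band width after one month, one year, and two years
round(width[c(30, 365, 730)])
```

With a fitted model, the same helper could be pointed at `pred1$interval` or `pred2$interval`, under the layout assumption above, to decide at which horizon the band becomes too wide to be useful.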

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.
